You did everything right with your website. But you're not making any more money.

- You worked out a clear strategy for your visitor funnel

- You pored over copy and agonised over calls to action

- You built reports in Google Analytics to check it was working as expected

- You experimented with changes and compared the results in Google Analytics to see whether they made a difference.

But after several months of incremental improvements, the bottom-line numbers haven't moved. What's going on?

Well, it's possible that the improvements you measured were not statistically significant. That link will take you to a Wikipedia page that gets a bit, well... maths-y. Alternatively, keep reading. Here's what you need to know.

There's a chance that the changes you made did absolutely nothing, and that the improvement you measured was just a random fluctuation in user behaviour, or was caused by something else, like the weather.

So, how do you know whether you really made a difference? The answer comes down to how much traffic you have. Let's look at some numbers:

[styled_table]

| | Before Your Change | After Your Change |
|---|---|---|
| Visitors | 1,000 | 1,000 |
| Conversions | 50 | 54 |
| Conversion Rate | 5% | 5.4% |

[/styled_table]

That seems pretty clear, right? Traffic stayed the same, but 4 more people converted on the second version of the site.

But in this instance, your results are not statistically significant and you shouldn't read too much into them. You might want to stick with the changes that you've made for personal and creative reasons. But don't expect that doing this over and over will move you forward.

Why Not?

Let's imagine that you could show the first version of your site to everyone who has ever lived. How many of them would convert? Probably something like 5%, because we did actually show it to 1,000 people and 5% of them converted. But the result for the entire human race might be 5.1% or 4.9%. It could also be 2.2%, and there's a tiny chance it would be 75%. We don't know for sure, but there's a reasonable chance that the particular 1,000 people we tested it on were fairly typical and not outliers.
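To put a number on that uncertainty, here's a minimal Python sketch of a 95% confidence interval using the simple normal (Wald) approximation. `conversion_rate_interval` is a hypothetical helper, not part of any analytics tool, and the approximation assumes each visitor converts independently of the others. For 50 conversions out of 1,000 visitors, it gives a plausible range for the "true" conversion rate of roughly 3.6% to 6.4%.

```python
from math import sqrt
from statistics import NormalDist


def conversion_rate_interval(conversions, visitors, confidence=0.95):
    """Approximate confidence interval for a conversion rate (normal/Wald approximation).

    Hypothetical helper for illustration; assumes visitors convert independently.
    """
    rate = conversions / visitors
    # Standard error: how much the measured rate tends to wobble between samples of this size
    std_err = sqrt(rate * (1 - rate) / visitors)
    # z-score for the chosen confidence level (about 1.96 for 95%)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return rate - z * std_err, rate + z * std_err


low, high = conversion_rate_interval(conversions=50, visitors=1000)
print(f"Plausible true conversion rate: {low:.1%} to {high:.1%}")
```

Notice that 5.4% sits comfortably inside that range, which is the whole problem.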

So let's imagine we don't make any changes; we just go out and find another 1,000 people to show our site to, and at the end of that process the conversion rate for that group is 5.4%. That could happen, right?

Exactly.

That second group of 1,000 people might just have a randomly different conversion rate.
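You can see this for yourself with a quick simulation. The Python sketch below (the `aa_test_lift_rate` helper and its defaults are hypothetical) assumes every visitor in both groups converts independently at the same true rate of 5%, so nothing has actually changed between the two groups. It then counts how often the second group of 1,000 still beats the first by 4 or more conversions. The answer should land at roughly a third of the time.

```python
import random


def aa_test_lift_rate(true_rate=0.05, visitors=1000, extra=4, trials=2000, seed=42):
    """Fraction of simulated 'no change' tests where group B beats group A by >= `extra` conversions.

    Hypothetical helper for illustration; both groups share the same true conversion rate.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible

    def conversions():
        # Each visitor converts independently with probability `true_rate`
        return sum(rng.random() < true_rate for _ in range(visitors))

    hits = 0
    for _ in range(trials):
        group_a = conversions()
        group_b = conversions()
        if group_b - group_a >= extra:
            hits += 1
    return hits / trials


print(f"B 'wins' by 4+ conversions in {aa_test_lift_rate():.0%} of identical tests")
```

Comparing two groups that see exactly the same site like this (often called an "A/A test") is a handy way to get a feel for how noisy your numbers are.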

When you crunch those numbers, there's actually about a 33% chance of that happening. So the improvement we measured above is exactly the kind of result you'd see roughly one time in three, even if our changes had made no difference at all. If you go through this process three times, there's a very good chance (about 70%) that at least once you've seen encouraging results for changes that didn't help at all.
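Here's one way to crunch those numbers: a minimal Python sketch of a one-sided two-proportion z-test, a standard method for comparing two conversion rates, though not necessarily the exact calculation behind the 33% figure. The helper name `one_sided_p_value` is hypothetical. For the 50 vs 54 example it returns a value close to one in three, and the last line shows how quickly that compounds over three independent tests.

```python
from math import sqrt
from statistics import NormalDist


def one_sided_p_value(visitors_a, conversions_a, visitors_b, conversions_b):
    """Chance of B beating A by at least this much if the change made no real difference.

    Hypothetical helper: a one-sided two-proportion z-test using the normal approximation.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Assume "no real difference" and pool both groups to estimate the shared rate
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Area of the normal curve beyond z: how often chance alone produces a lift this big
    return 1 - NormalDist().cdf(z)


p = one_sided_p_value(visitors_a=1000, conversions_a=50, visitors_b=1000, conversions_b=54)
print(f"Chance of this lift with no real effect: {p:.0%}")

# Chance that at least one of three independent no-effect tests looks this good
print(f"Across three tests: {1 - (1 - p) ** 3:.0%}")
```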

Doing it Right

The key is to test the changes on a big enough group, and to get a big enough difference in the conversion rates, to be 95% confident that it's not a fluke. If you're not a budding statistician, there are tools that can work this out for you. Next time you run a test on your website, head over to this site and enter the visitor and conversion numbers to check whether your results are significant:

http://getdatadriven.com/ab-significance-test

If you do want to get into the details, check out this answer on Quora and the Wikipedia pages on Z-tests.

What you'll find is that it's very hard to get a statistically significant result unless you get a huge amount of traffic to your site. You can increase your numbers by running tests for longer periods of time, but ultimately, if you have a low-traffic site, your efforts are better directed at getting more traffic than at experimenting on the site itself.
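To see just how much traffic "a huge amount" is, here's a minimal Python sketch of the standard normal-approximation sample size formula for comparing two conversion rates. The helper `visitors_per_variant` is hypothetical, and the 80% power default (the chance of detecting a real improvement when there is one) is a common convention rather than something from this article. For the 5% to 5.4% example, it asks for roughly 48,000 visitors per version, about 48 times the 1,000 we tested on.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(base_rate, new_rate, confidence=0.95, power=0.80):
    """Approximate visitors needed in EACH version to reliably detect base_rate -> new_rate.

    Hypothetical helper; the 80% power default is an assumed convention.
    """
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided, about 1.96 at 95%
    z_beta = NormalDist().inv_cdf(power)                      # about 0.84 at 80% power
    variance = base_rate * (1 - base_rate) + new_rate * (1 - new_rate)
    return ceil((z_alpha + z_beta) ** 2 * variance / (new_rate - base_rate) ** 2)


print(visitors_per_variant(0.05, 0.054))  # a small lift needs a lot of visitors
print(visitors_per_variant(0.05, 0.07))   # a bigger lift needs far fewer
```

Because the difference in rates is squared in the denominator, halving the size of the improvement you're trying to detect roughly quadruples the traffic you need.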

One more thing...

Scientists will be screaming at me right now because I've kind of assumed that you're measuring the conversion rates on a site, then making some change that everyone sees and then taking another measurement. That's quite a bad idea.

The problem here is that people's behaviour changes over time. The economy changes, the weather changes, your competitors change; lots of things could be responsible for a change in your numbers.

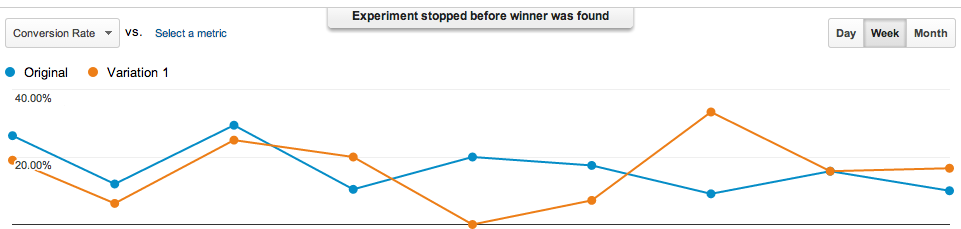

To really do this right, you need to run an A/B test, which means randomly showing each person one version of the site or the other over the same time period. That way you know that any differences are not caused by some external factor.
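If you were building the split yourself rather than using a tool, the core idea is small. This Python sketch is an illustration, not how Google Analytics does it: the `assign_variant` function, the experiment name and the visitor IDs are all hypothetical. It hashes each visitor's ID, so the split is effectively random across visitors, while a returning visitor keeps seeing the same version.

```python
import hashlib


def assign_variant(visitor_id, experiment="homepage-test"):
    """Split visitors roughly 50/50 between version A and version B.

    Hypothetical helper; `experiment` and `visitor_id` values are illustrative.
    """
    # Hash experiment + visitor so assignment is stable per visitor but unrelated across experiments
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).digest()
    # The parity of the first byte is effectively a fair coin flip
    return "A" if digest[0] % 2 == 0 else "B"


for visitor in ["visitor-1", "visitor-2", "visitor-3"]:
    print(visitor, assign_variant(visitor))
```

Hashing rather than flipping a fresh coin on every page view matters: if the same person saw both versions, you couldn't tell which one persuaded them to convert.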

Google Analytics has tools to help you do this. Check out their Content Experiments feature, which lets you set up two versions of a particular page; Google will then take care of which version people see and will even measure the results for you.

Steadily improving the performance of your site is immensely powerful. Over time, every small step forward that you make will start to compound and make a real difference. Just check that you really are moving forward and not chasing your tail.